Dictation and Siri for Vision Pro

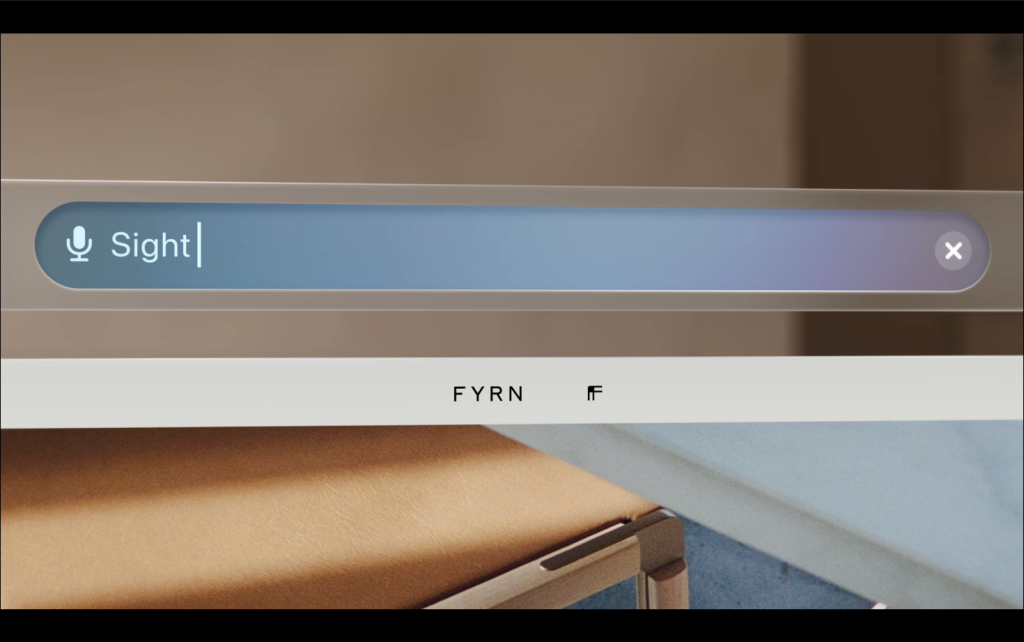

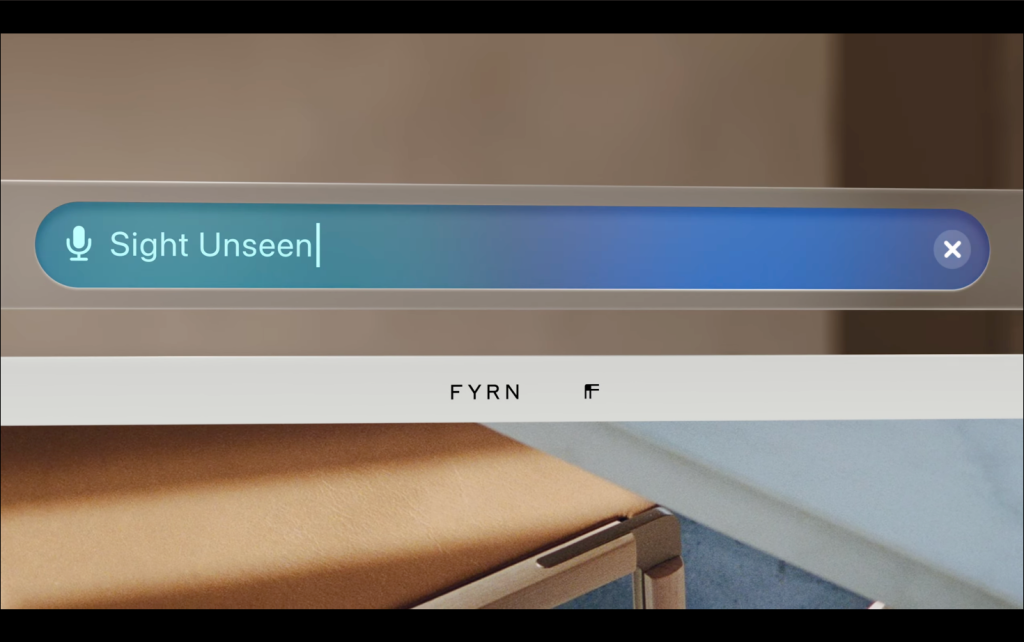

I worked on Dictation and Siri interaction designs for the world’s first true spatial computer, Apple Vision Pro. The eye-tracking capability of the device unlocks incredibly magical experiences, such as searching the web merely by looking at Safari’s address bar and speaking.

My contributions to Dictation included multimedia mockups illustrating how a machine-learned model trained on a stream of gaze coordinates could distinguish intended dictation events from incidental speech.

The visionOS behavior follows the precedent set by Safari Voice Search for iOS/iPadOS, but it’s even more fluid, in that you don’t have to activate a graphical element (i.e., a microphone button) before you can start speaking. You just look and speak.
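To make the "look and speak" idea concrete, here is a minimal, purely illustrative sketch. It is not the shipped visionOS behavior, which relied on a machine-learned model. It swaps in a hand-written dwell heuristic instead: treat speech as intended dictation only if most recent gaze samples fell on the text field just before the speech began. All names (`GazeSample`, `Rect`, `looks_like_dictation`) and the `window` and `min_fraction` thresholds are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class GazeSample:
    """One hypothetical gaze reading: a timestamp in seconds and a 2D point."""
    t: float
    x: float
    y: float


@dataclass(frozen=True)
class Rect:
    """Axis-aligned bounds of a UI element, such as an address bar."""
    left: float
    top: float
    width: float
    height: float

    def contains(self, x: float, y: float) -> bool:
        return (self.left <= x <= self.left + self.width
                and self.top <= y <= self.top + self.height)


def looks_like_dictation(
    samples: Sequence[GazeSample],
    field: Rect,
    speech_onset: float,
    window: float = 0.5,        # assumed: seconds of gaze history to inspect
    min_fraction: float = 0.8,  # assumed: share of samples that must hit the field
) -> bool:
    """Heuristic stand-in for a learned classifier.

    Returns True when at least `min_fraction` of the gaze samples in the
    `window` seconds leading up to `speech_onset` landed inside `field`.
    """
    recent = [s for s in samples if speech_onset - window <= s.t <= speech_onset]
    if not recent:
        # No gaze evidence at all: treat the speech as incidental.
        return False
    on_field = sum(1 for s in recent if field.contains(s.x, s.y))
    return on_field / len(recent) >= min_fraction


# Usage: a steady look at the address bar, then speech starting at t = 1.0 s.
address_bar = Rect(left=100, top=20, width=600, height=40)
steady = [GazeSample(t=i / 10, x=300, y=40) for i in range(11)]
wandering = [GazeSample(t=i / 10, x=900, y=500) for i in range(11)]

should_dictate = looks_like_dictation(steady, address_bar, speech_onset=1.0)
should_ignore = looks_like_dictation(wandering, address_bar, speech_onset=1.0)
```

A fixed threshold like this would be brittle in practice, since it cannot tell a deliberate look from a glance that happens to cross the field. That gap is one plausible reason to prefer a learned model over a rule.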

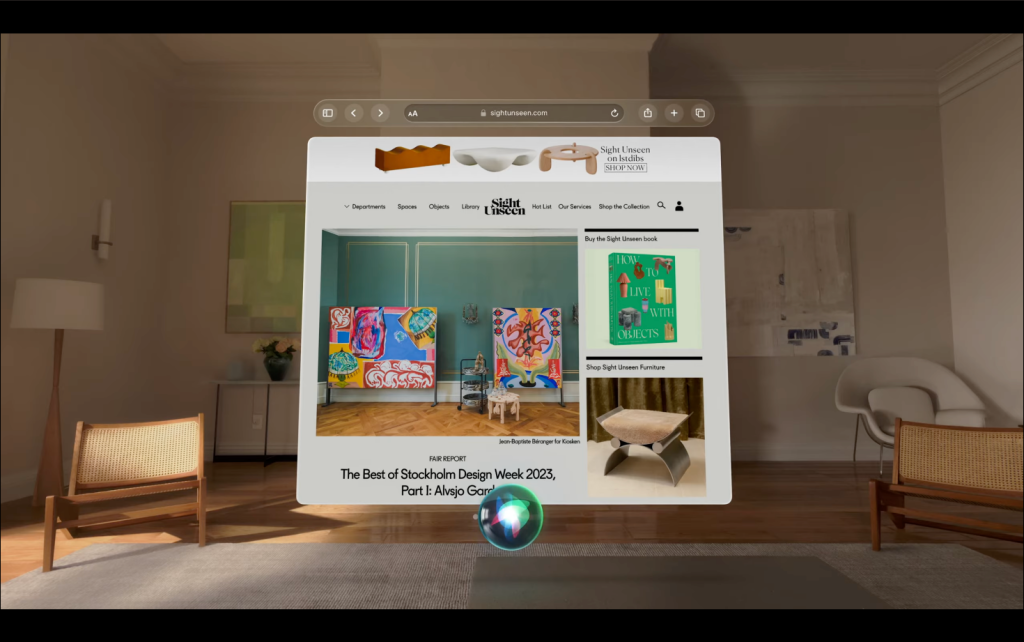

The Siri product also benefits from the superpower of gaze awareness, taking into account where you’re looking when you say commands like “add this to Up Next.”

My contributions to Siri on visionOS included invocation, listening, response and dismissal policy design, as well as multimedia mockups of Siri performing actions in apps, such as “share this with Tasha” while looking at a webpage in Safari.

My team certainly wasn’t the first to explore the combination of voice commands with a location-of-interest signal (check out the pioneering “Put-That-There” work from the MIT Media Lab in 1980!), but I’m proud of what we accomplished. The industry is only starting to scratch the surface of what’s possible at the intersection of gaze, gesture and voice in virtual and augmented reality.