Multimodal inputs for voice commands (inventor, 2018)

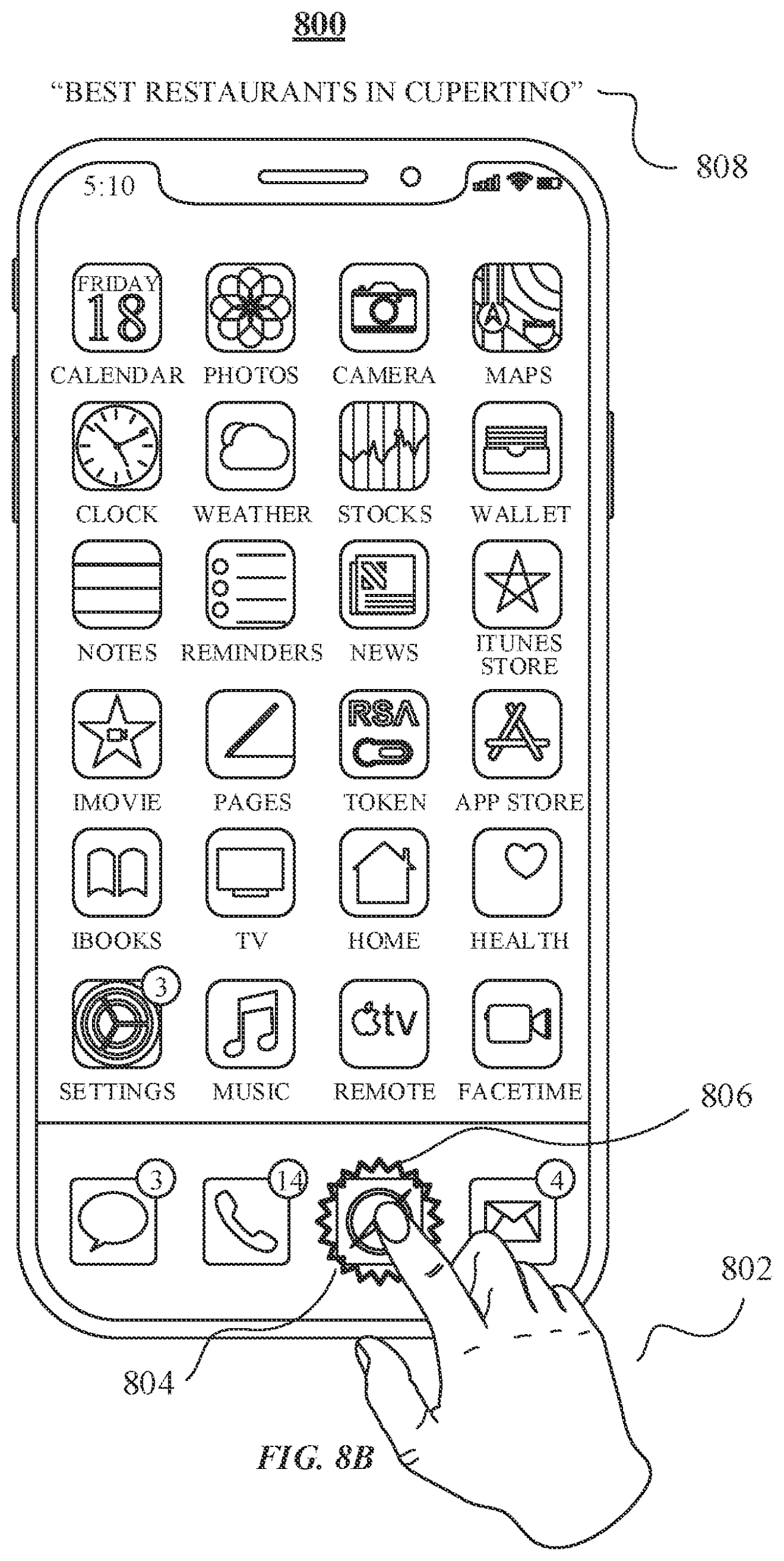

Philippe and I had come up with similar ideas independently, so we joined forces on a prototype and its accompanying invention disclosure. The concept is that the X,Y coordinates of a special touchscreen input gesture, say touch and hold, can provide information to the voice command interpreter, causing it to interpret the voice command or text payload in the context of the item or region that was touched.

Basically, think of your finger as “directing” your speech somewhere specific onscreen.
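
To make the idea concrete, here is a minimal Python sketch of how a touch coordinate might scope a voice command. It is an illustration only, not the prototype or the patented implementation: the `Region` class, the `hit_test` and `interpret` functions, and the example screen layout are all hypothetical names and values invented for this example.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Region:
    """A hypothetical on-screen item with a rectangular bounding box."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        # True when the point lies inside this region's bounding box.
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


def hit_test(regions: Sequence[Region], px: float, py: float) -> Optional[Region]:
    """Return the topmost region under the touch point, if any."""
    # Assumption: later entries are drawn on top, so search in reverse.
    for region in reversed(regions):
        if region.contains(px, py):
            return region
    return None


def interpret(command: str,
              regions: Sequence[Region],
              touch: Optional[Tuple[float, float]] = None) -> dict:
    """Pair a voice command with the region the finger was holding."""
    target = hit_test(regions, *touch) if touch is not None else None
    # With no touch (or a touch outside every region), the command
    # falls back to having no specific on-screen target.
    return {"command": command, "target": target.name if target else None}


# Hypothetical layout: a search box drawn on top of a full-screen map.
REGIONS = [
    Region("map", 0, 0, 800, 600),
    Region("search_box", 20, 20, 300, 40),
]

print(interpret("zoom in", REGIONS, touch=(400, 300)))
print(interpret("coffee shops", REGIONS, touch=(30, 30)))
print(interpret("go home", REGIONS))
```

In this sketch, holding a finger on the map while saying "zoom in" targets the map, the same gesture on the search box directs "coffee shops" there, and a command spoken without a touch has no on-screen target.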

Original Filing: 26 December, 2018

Status: Granted

Amended Filing: 10 November, 2023

Status: Pending