Multimodal Platform Design

Listening and Responses

Above is a Sketch/ProtoPie mockup I made showing improved, more adaptive listening and response behavior for the Siri virtual assistant on iOS. Siri’s listening and turn-taking behavior were key areas of my design responsibility during my last few years at Apple. (Disclaimer: the mockup includes a listening animation and some standard library elements created by other designers.)

I advocated the view that a virtual assistant needs to be more than smart. It needs to be a good listener. That means listening actively and adaptively, accounting for the hesitations, disfluencies, interruptions, and overlaps that occur naturally when people talk to each other.

A virtual assistant needs to be more than smart.

It needs to be a good listener.
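One way to make "good listening" concrete is adaptive endpointing: deciding how long to wait after the user goes quiet before treating their turn as finished. The Python below is a hypothetical sketch, not how Siri actually works. The function name `endpoint_timeout`, the filler and continuation word lists, and every timeout value are illustrative assumptions. It waits longer when the partial transcript ends in a hesitation or a dangling word, and ends the turn sooner when the request already looks complete.

```python
# Hypothetical adaptive-endpointing heuristic (illustrative only).
# The word lists and timeout values are assumptions, not tuned parameters.

FILLERS = {"um", "uh", "er", "hmm", "like"}
# Words that usually signal the speaker has more to say.
CONTINUATIONS = {"and", "or", "but", "to", "the", "a", "for", "with", "of"}

BASE_TIMEOUT_S = 0.8        # default silence window before ending the turn
HESITATION_TIMEOUT_S = 2.0  # longer window after a filler or dangling word
COMPLETE_TIMEOUT_S = 0.5    # shorter window when the request looks complete


def endpoint_timeout(partial_transcript: str, looks_complete: bool = False) -> float:
    """Return how many seconds of silence to wait before treating the turn as over."""
    words = partial_transcript.lower().split()
    if not words:
        # Nothing recognized yet: give the user time to start speaking.
        return HESITATION_TIMEOUT_S
    last = words[-1].strip(".,?!")
    if last in FILLERS or last in CONTINUATIONS:
        # The user is mid-thought ("set a timer for, um"), so keep listening longer.
        return HESITATION_TIMEOUT_S
    if looks_complete:
        # An upstream parser (assumed here) judged the request complete.
        return COMPLETE_TIMEOUT_S
    return BASE_TIMEOUT_S
```

For example, `endpoint_timeout("set a timer for, um")` returns the longer 2.0-second window, while `endpoint_timeout("what time is it", looks_complete=True)` returns 0.5. Signals such as prosody or recognizer confidence could also feed this decision; the fixed word lists here only make the idea visible.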

Assistant outputs should make use of the right sensory channel — visual, auditory, or tactile — for the situation, and visual UI changes should be subtle and incremental, rather than jumpy or jarring.

In a graphical app, customers expect buttons, tabs, and menus to look and behave consistently as features are added over time, and they (consciously or not) expect the same of the fundamental building blocks of a voice user interface. As a voice assistant gains features or knowledge, it should remain familiar in how and when it listens or speaks, and in how it structures certain key conversational patterns, such as those surrounding authentication or payment.

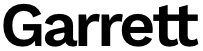

When to Speak

We expect and rely on more detailed, verbose spoken output when the virtual assistant is built into or paired with our car, because we need to keep our attention on the road. That same level of verbosity can feel superfluous or annoying, however, when we could take in the information more quickly by reading a screen.

The flow diagram below, which I made with PlantUML (a lightweight, text-based diagramming tool), is an example of the kind of policy guideline I might craft for a voice or natural-language assistant. To give customers the desired level of predictability, traditional rule-based software may need to be layered around the statistical predictions of the AI model. Alternatively, a specification like this one could be given to the generative model as a system prompt, in an attempt to steer its inherently less predictable behavior toward consistency.
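A minimal sketch of the first approach, a deterministic rule layer wrapped around the model's output, might look like the Python below. It assumes, hypothetically, that the generative model returns both a terse and a detailed wording, and that the device reports whether the user is driving and whether a readable screen is available. The names `Context`, `DraftResponse`, `Presentation`, and `choose_output` are illustrative and do not come from any real assistant. The first two rules encode the verbosity guideline from this section. The audio-only branch is an additional assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Context:
    """Hypothetical situation signals available to the policy layer."""
    driving: bool         # assistant is built into or paired with a car
    screen_visible: bool  # a display the user can read is available


@dataclass
class DraftResponse:
    """Hypothetical model output: a terse wording and a detailed wording."""
    short_text: str
    long_text: str


@dataclass
class Presentation:
    speak: Optional[str]    # text to synthesize as speech, or None for silence
    display: Optional[str]  # text to show on screen, or None


def choose_output(ctx: Context, draft: DraftResponse) -> Presentation:
    """Apply fixed rules after the model so the output shape stays predictable."""
    if ctx.driving:
        # Attention stays on the road: speak the full answer; a screen only echoes a summary.
        summary = draft.short_text if ctx.screen_visible else None
        return Presentation(speak=draft.long_text, display=summary)
    if ctx.screen_visible:
        # Reading is faster than listening: brief speech, details on screen.
        return Presentation(speak=draft.short_text, display=draft.long_text)
    # Audio-only device (assumed case): speak the detailed version.
    return Presentation(speak=draft.long_text, display=None)
```

Because these rules live outside the model, the same context always produces the same kind of output, whatever wording the model generates. The system-prompt alternative would instead state these rules in natural language and rely on the model to follow them.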